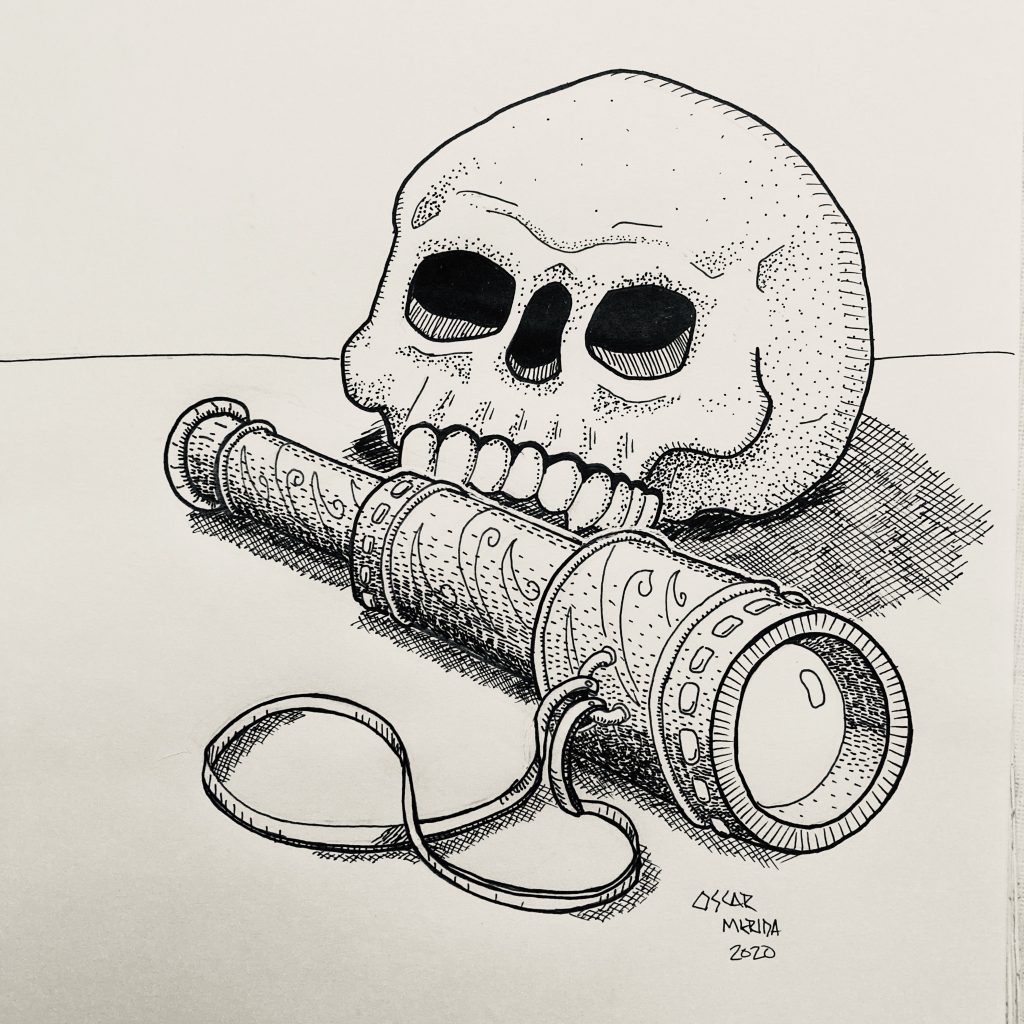

Cursed

Day 3, cursed. This spyglass lets you see the future but takes away your memory. #adventurertober2020

Random DnD Monuments

For fun, I dove into procedural text generation using Improv. It works well enough: writing grammars as JSON files is tedious, but you can generate some interesting bits of text at random. After getting the hang of it, I went down the rabbit hole of generating descriptions of monuments a party might