Uncategorized

Testing HTTP Redirects in PHP

For a task today, I wanted to verify that redirects on a website were working as expected after migrating them from one redirect plugin to another one (yes, this site had 2 enabled). I couldn’t figure out the right invocation to test them using cURL in PHP, even though the documentation seemed straightforward (just set

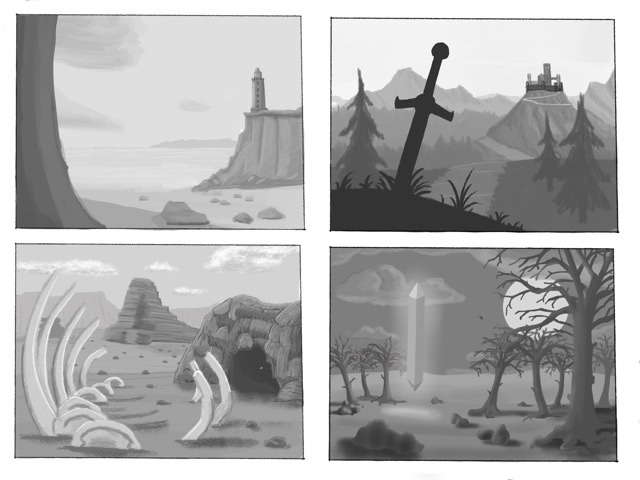

Random DnD Monuments

For fun, I dove into procedural text generation using Improv. It works well enough – the fact that grammars are JSON files is tedious but you can create some interesting bits of text at random. After getting the hang of it, I went down the rabbit hole to generate descriptions of monuments a party might

Get the First Page of a PDF as an Image

Easy with ImageMagick: # get the first page as a PNG convert -resize 1275x1650 "example.pdf"[0] cover.jpg # make the smaller version convert -resize 155x200 cover.jpg

Measuring developer productivity

My friend Sandy shared a link to a fascinating natural experiment comparing the productivity of two similarly tasked developer teams. If you haven’t read it already, take a minute to check it out. I’ve seen this need for visibility throughout my career. The cable company was a rare laboratory; you could observe a direct comparison between

Colorized Word Diffs

I’ve been finding myself doing a lot of copy and tech editing. I needed a way to annotate a PDF based on the changes I’d made to our markdown source. Trying to eyeball this was difficult, so I checked whether there was a way to do word-by-word diffs based on SVN output. Remember, SVN’s diff

Highest attended soccer matches in the USA

This started as a reply to a reddit poster claiming a USA-Turkey match in 2010 was “the highest attended soccer match ever.” According to this game report, the attendance was 55,407: http://www.ussoccer.com/news/mens-national-team/2010/05/turkey-game-report.aspx Nice, but not the largest for soccer that I can find. Portugal played the USA at RFK during the